Recurrent Neural Networks

A recurrent neural network is a kind of artificial neural network that contains loops, allowing information to be stored within the network.

Introduction

In my previous blog, we briefly discussed artificial neural networks and their types.

A recurrent neural network (RNN) is a kind of artificial neural network that contains loops, allowing information to be stored within the network. The output (hidden state) from the previous step is fed back as an input to the current step. This makes RNNs suited to sequential or time-series data.

Recurrent neural networks are derived from feed-forward neural networks. RNNs can use their internal state (memory) to process variable-length sequences of inputs. This makes them applicable in deep learning and in the development of models that simulate aspects of the human brain. They are mainly used in speech recognition and natural language processing (NLP).

Why are recurrent networks used? 💡

We know that in traditional (feed-forward) neural networks, all the inputs and outputs are treated as independent of each other. But to predict the next word of a sentence, the network needs the previous words, so it has to remember them. RNNs address this with a hidden state, carried by the hidden layer from one step to the next, that holds information about the sequence seen so far.

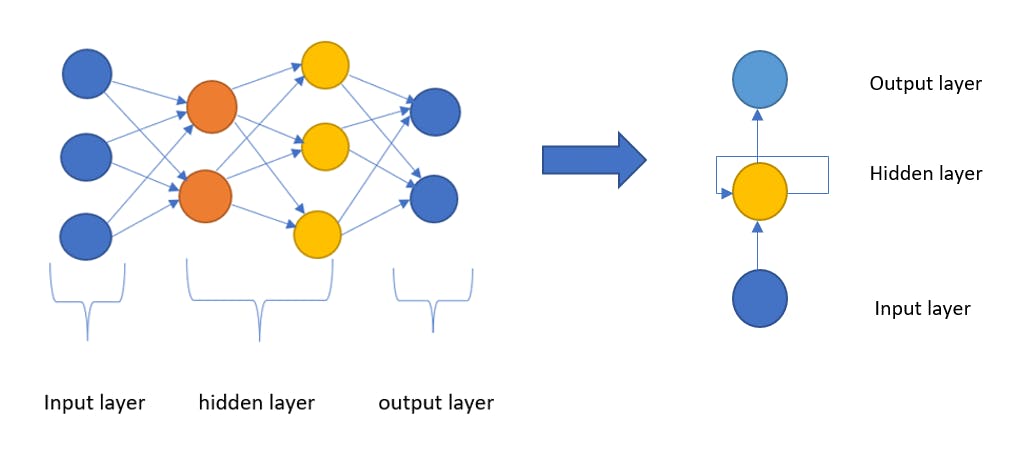

How is a feedforward network converted into a recurrent neural network? 🛠️

A recurrent neural network takes the hidden layers of a feed-forward network, gives them all the same weights and biases, and combines them into a single recurrent layer. That layer receives its own previous output as an extra input alongside the current input. The weights of the recurrent neuron are the same at every time step, since it is now a single neuron. So a recurrent neuron stores the state from the previous input and combines it with the current input, thereby preserving a relationship between the current input and the previous ones.
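To make the weight sharing concrete, here is a minimal sketch of a recurrent layer in plain Python. It assumes the common update rule h_t = tanh(W_xh · x_t + W_hh · h_prev + b_h); the names `rnn_step`, `rnn_forward`, `W_xh`, `W_hh`, and `b_h` and the weight values are hand-picked for illustration, not taken from any library or trained model.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: combine the current input with the previous state."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Apply the same step, with the same weights, to every sequence element."""
    h = [0.0] * len(b_h)  # initial hidden state: nothing remembered yet
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Illustrative weights (assumed): 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Only the first input is non-zero; later states are still non-zero
# because the hidden state carries information forward.
sequence = [[1.0], [0.0], [0.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
for t, h in enumerate(states):
    print(t, [round(v, 4) for v in h])
```

Note that `rnn_forward` never creates new weights inside the loop: the single set `W_xh`, `W_hh`, `b_h` is reused at every time step, which is the sharing described above.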

Applications of RNN 🎌

Image captioning

Machine Translation

Speech Recognition

Language Modelling and Generating Text

Video Tagging

Text Summarization

Call Center Analysis

Face detection

And other applications

We will discuss some major applications here:

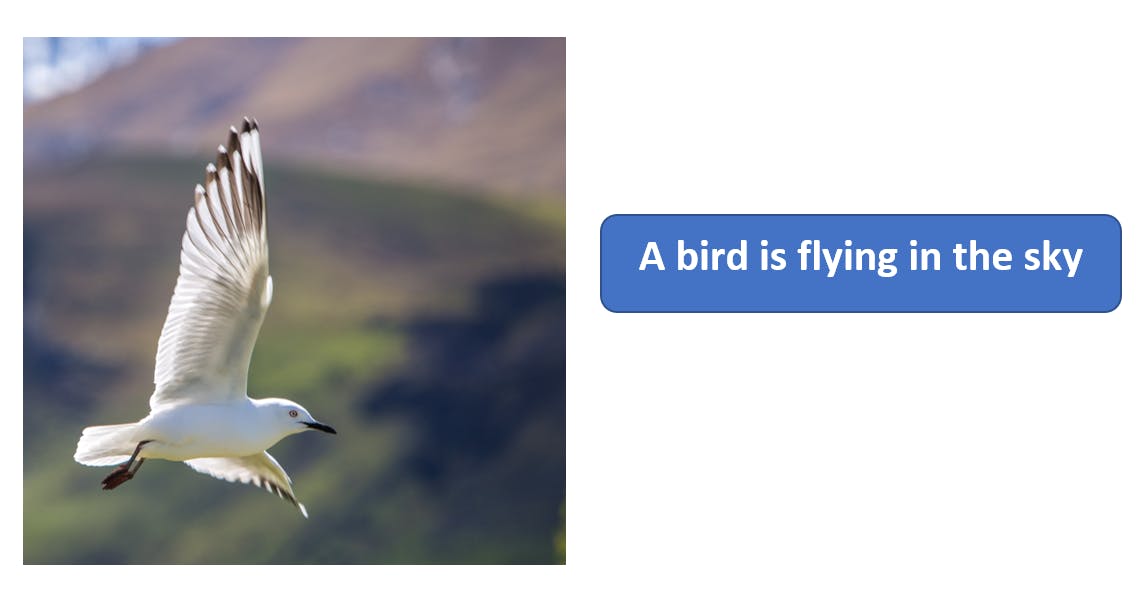

Image captioning:

An RNN, usually paired with a convolutional network that reads the image, can generate a description of the image in the form of text or tags.

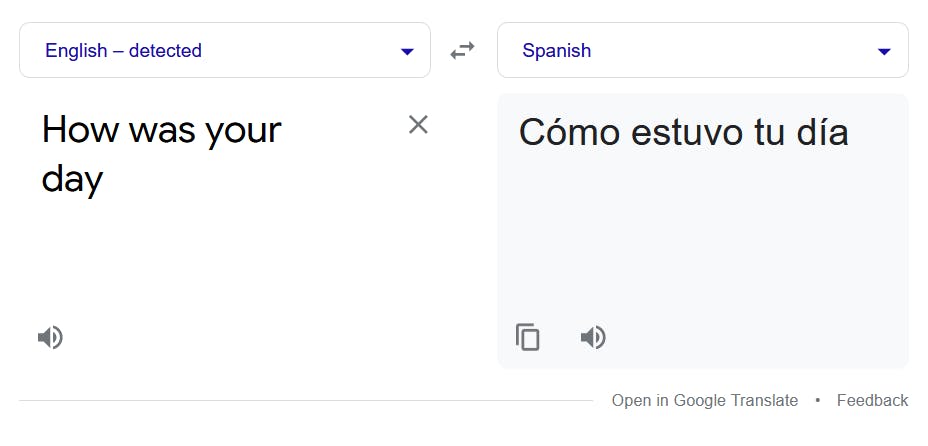

Machine translation:

RNNs can be used for translating text from one language to another. One of the most popular machine translation applications today is Google Translate. E-commerce applications like Flipkart, Amazon, and eBay use machine translation in many areas, and it also helps improve their search results.

Speech recognition:

Recurrent neural networks have largely replaced traditional speech recognition models based on Hidden Markov Models. They can be used to predict phonetic segments, taking the sound waves from a recording as the input.

Sentiment analysis:

Because RNNs process data sequentially, they are well suited to finding the sentiment of a sentence, where the order of the words matters.

Text summarization:

RNNs help summarize content from literature and adapt it for delivery within applications that cannot support large volumes of text. For example, if a publisher wants to display a summary of a book on its back cover to give readers an idea of the content, text summarization would be helpful.

Types of RNN

One to One -

It has a single input and a single output.

Used in simple machine learning problems.

One to Many -

It has a single input and multiple outputs.

Example – image captioning.

Many to One -

It takes a sequence of inputs and generates a single output. Example – sentiment analysis.

Many to Many -

It takes a sequence of inputs and generates a sequence of outputs.

Example – machine translation.
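The sequence-based types above differ mainly in which inputs are consumed and which outputs are kept. The sketch below illustrates this with a toy scalar recurrent step; the function names and the weights `w_x` and `w_h` are assumptions made up for this example. The many-to-many version shown is the simple aligned case (one output per input); real translation systems typically use an encoder-decoder arrangement so that input and output lengths can differ.

```python
import math

def step(x, h, w_x=0.8, w_h=0.5):
    """Scalar recurrent step with illustrative, hand-picked weights."""
    return math.tanh(w_x * x + w_h * h)

def many_to_many(xs):
    """Emit one output per input (here the output is just the hidden state)."""
    h, outputs = 0.0, []
    for x in xs:
        h = step(x, h)
        outputs.append(h)
    return outputs

def many_to_one(xs):
    """Read the whole sequence, then keep only the final state."""
    return many_to_many(xs)[-1]

def one_to_many(x, n_steps):
    """Consume a single input, then keep unrolling with no further input."""
    h = step(x, 0.0)
    outputs = [h]
    for _ in range(n_steps - 1):
        h = step(0.0, h)
        outputs.append(h)
    return outputs

xs = [1.0, -0.5, 0.25]
print(many_to_many(xs))      # a list with one value per input
print(many_to_one(xs))       # a single value summarizing the sequence
print(one_to_many(1.0, 4))   # several values generated from one input
```

In a sentiment model, the single value from `many_to_one` would be passed to a classifier; in a captioning model, each value from `one_to_many` would be mapped to a word.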

Challenges faced by RNN 🤔

An RNN is supposed to carry information through time. However, it is quite challenging to propagate all this information when the sequence is too long. An RNN unrolled over many time steps behaves like a very deep network: the gradients shrink as they are propagated back through each step, until the early steps can no longer be trained. This is called the vanishing gradient problem.

If, while training a neural network, the gradients tend to grow exponentially instead of decaying, this is called the exploding gradient problem. It arises when large error gradients accumulate, resulting in very large updates to the model weights during training.
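Both problems can be seen in a toy calculation. The sketch below assumes the simplest possible recurrence, h_t = w * h_{t-1}, so the gradient of the last state with respect to the first is just w multiplied by itself once per time step. It also shows gradient clipping by norm, a common mitigation for exploding gradients that is not covered in this post; `gradient_through_time` and `clip_by_norm` are hypothetical helper names written for this example.

```python
import math

def gradient_through_time(w, n_steps):
    """d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(n_steps):
        grad *= w  # chain rule: one factor of w per time step
    return grad

def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

vanishing = gradient_through_time(0.5, 50)  # |w| < 1: shrinks toward zero
exploding = gradient_through_time(1.5, 50)  # |w| > 1: grows without bound
print(f"w=0.5 over 50 steps: {vanishing:.3e}")
print(f"w=1.5 over 50 steps: {exploding:.3e}")

# Clipping keeps the direction of the gradient but caps its size.
print(clip_by_norm([3.0, 4.0], max_norm=1.0))
```

A real RNN multiplies by a weight matrix and an activation derivative at each step rather than a single number, but the same exponential shrinking or growth applies.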

So readers, today we learned the basics of recurrent neural networks. I hope you enjoyed it. See you in the next blog. 😊